I’ve never really written one of these yearly retrospectives about my own work and career progress, mostly because I’ve never had anything noteworthy enough to write about.

Following your normal career path is not really anything to write home about, but I guess it’s what you do and how you do it that will matter most for your own career growth.

In that sense, I would easily elect 2024 as my “first real career year” in programming. The year when I had to go above and beyond because the circumstances sort of demanded it.

With the whole GenAI boom, my job was no different from the rest of the industry. In fact, in 2023 we had already built a sort of “proof-of-concept” integrating LLMs into our core products, and the results were actually decent, so I pushed internally to get a permanent team assigned to “working on AI initiatives”.

Luckily, my prayers were heard and, in March 2024, we got an official team set up with the narrow scope of “extending our core platform by enhancing it with AI-powered features”.

Since I had been a vocal advocate for this, it was nice to have the opportunity to really see how far this could be pushed, from a POC to something running in production. This was exciting because there was nothing at all. No repo, no team name, no concrete deliverables, no business objectives. Zero, zilch, nada. It started with choosing a team name and having a handful of very high-level, ambitious goals to reach “as time passed”, so to speak. This ended up teaching me a lot about a lot of stuff.

Greenfield projects are awesome!

There’s a huge difference between working with something and creating something.

Sure, it’s easy to work on small features on a big existing project, because things are just there.

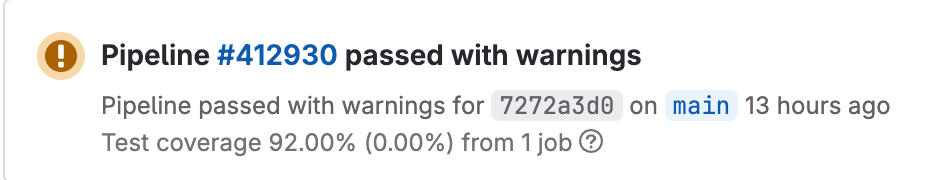

Pipelines are there, code is there, examples are there, tests are there. A lot of the work is just pattern matching and extending an existing structure to support “new feature X” or “extend capability Y”, which is interesting and nice, but, you leave a lot of learning on the table if that’s all you do.

When you start with a blank sheet, no repository at all, you surface all the complex details of your infrastructure that you didn’t know you needed in the first place. It becomes a completely different beast and you are forced to level up. By teaming up with my platform colleagues I ended up learning a lot about GitLab pipelines, Kubernetes, Docker and multi-project pipeline dependencies that I doubt I’d ever have seen or needed had I stayed doing the same thing over and over. It was effectively a huge learning opportunity to build something out of thin air and I’m super glad I took it.

You can then take time and pride in your work and push boundaries in whichever direction you choose.

If you can work on greenfield projects, you can choose what to focus on, which is a rare luxury to have!

Quality largely depends on your domain knowledge

Being in the software quality business, quite literally, the bar is set relatively high for code quality which creates some good habits and opens up a lot of interesting realizations, too.

The biggest of all is that quality never exists only in a vacuum, in terms of code “cleanliness or flexibility”. It’s largely dependent on the domain knowledge you have, and sometimes also on less business-related goals that are closer to non-functional requirements, such as performance, memory usage, reliability, etc.

If you know the domain you’re working on, and what you really want to achieve from the business perspective, then the right architecture for a given piece of functionality or extension will almost always be obvious. This is where the cracks can start showing when it comes to the technical skill needed to actually realize that architecture, and that’s where a lot of learning can be done! If you see something failing or becoming too convoluted, simplifying it so it can be efficient, or so you can scale or extend it easily, becomes a nice challenge in itself, but you need to go through the pains first to see what needs to happen.

If you then gain the skill to realize this more efficient version of your original vision, that’s when you know growth is happening. This is something I had very rarely seen before, and do see now!

Focus on making things as good as possible, always starting from the quality that your business needs and technical requirements actually call for.

Optimize only when it hurts

What I also learnt “for real”, but had always known in theory, is that optimizing things in a vacuum, or out of a simple desire of “this can be made better”, almost always leads to wasted effort, because it’s easy to get side-tracked with pointless abstractions that end up making things harder in the long run.

It turns out that you’re not really going to touch that class hierarchy that you spent so much effort to simplify after all.

You need to optimize for: business needs first, readability second, explicit maintainability third.

What I’ve witnessed first hand is that if you take business requirements into account from the get-go, you usually end up with code that’s already as readable and as maintainable as your understanding of the business, which is good enough for most cases. Good enough is usually very good.

Only take the extra steps when needed.

As an example, we had a RAG pipeline that was importing documents extremely fast, but we knew that was the smallest source of data we would ever have. Would it work when we scaled up?

Turns out it didn’t. We were getting our pod’s process killed with an Out of Memory error.

That’s when you dig deep and optimize.

Even then, do it slowly.

Every optimization introduces some level of complexity.

So add a layer, measure.

If needed, add one more. Measure.

Rinse and repeat.
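To make the “add a layer, measure” loop concrete, here is a minimal Python sketch. It is not our actual pipeline: `fake_documents`, `import_all_at_once` and `import_in_batches` are hypothetical stand-ins, and batching is just one possible first layer, assumed here for illustration. The only real API used is the standard library’s `tracemalloc`, which reports peak allocated memory.

```python
import tracemalloc
from itertools import islice
from typing import Callable, Iterable, Iterator, List, Tuple


def fake_documents(count: int, size: int = 10_000) -> Iterator[str]:
    """Hypothetical document source: lazily yields one document at a time."""
    for i in range(count):
        yield f"doc-{i}:" + "x" * size


def batched(items: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Group an iterable into lists of at most batch_size items."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def import_all_at_once(docs: Iterable[str]) -> int:
    """Baseline: materialize every document before processing it."""
    loaded = list(docs)
    return sum(len(d) for d in loaded)


def import_in_batches(docs: Iterable[str], batch_size: int = 50) -> int:
    """One added layer: hold at most batch_size documents in memory."""
    total = 0
    for batch in batched(docs, batch_size):
        total += sum(len(d) for d in batch)
    return total


def peak_memory(func: Callable[[], int]) -> Tuple[int, int]:
    """Run func and return (result, peak bytes traced by tracemalloc)."""
    tracemalloc.start()
    try:
        result = func()
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


if __name__ == "__main__":
    n = 2_000
    _, peak_a = peak_memory(lambda: import_all_at_once(fake_documents(n)))
    _, peak_b = peak_memory(lambda: import_in_batches(fake_documents(n)))
    print(f"all at once: {peak_a / 1e6:.1f} MB peak")
    print(f"in batches:  {peak_b / 1e6:.1f} MB peak")
```

The point is the shape of the loop rather than the specific fix: keep the baseline around, add one change, compare the numbers, and only then decide whether the next layer of complexity is worth it.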

LLMs are awesome!

I thoroughly recommend using Claude 3.5 Sonnet alongside reading the official documentation for whatever it is you’re doing.

Before the “real rise” of LLMs as useful work support tools (they were available much earlier than 2022, but they were considerably worse then), you’d need to use a combination of searching Stack Overflow, asking senior devs on your team (if there are any!) and pattern matching on top of your existing codebase.

You can still do all of these in 2024, but the main point is that today you don’t have to.

With some careful prompting plus a clear understanding of what you need to solve (this is the truly hard part that a lot of people get wrong!), you can get to 90-95% completion and put your efforts into nailing that last 5-10%. It’s not just a “better Stack Overflow or Google” and, unless you’re working on niche areas, you’re missing out if you don’t use it properly.

Leverage LLMs and you’ll get better at your job even without them! You’ll see things you’d have missed before, and you’ll train your architecture intuition simply because your brain will be free to work on “the real problems”. Before, those would usually be obscured by the tooling and configuration issues that still plague 99% of modern projects.

Docker is still king!

More than ever, I can’t stop recommending Docker to people and, ever since I learnt it, I’ve drastically improved my own prototyping speed! The best way to uncover unknown unknowns is to plow through them. If you have to keep experimenting on a branch, or you’re afraid you’ll accidentally commit something you don’t want somewhere it doesn’t belong, or you’re missing some key dependency, whatever, just use Docker.

A Docker Compose file gives you the perfect sandbox to test your hypotheses and makes it easy to share them with your coworkers. You can even use it for your side projects almost “as-is”, and it’s extremely flexible in terms of how close you can get to a real production setup.

Learn and use Docker if you haven’t yet. It’s more relevant now than ever before: with GenAI and LLMs on the rise, the demands for moving fast are only expected to increase. So, learn Docker.

Overall, 2024 was a great year for me. I managed to extract A LOT of value from all the projects I worked on and learned a ton about the internals of several tools, which is always super valuable. I’m quite excited for 2025, bring it on!